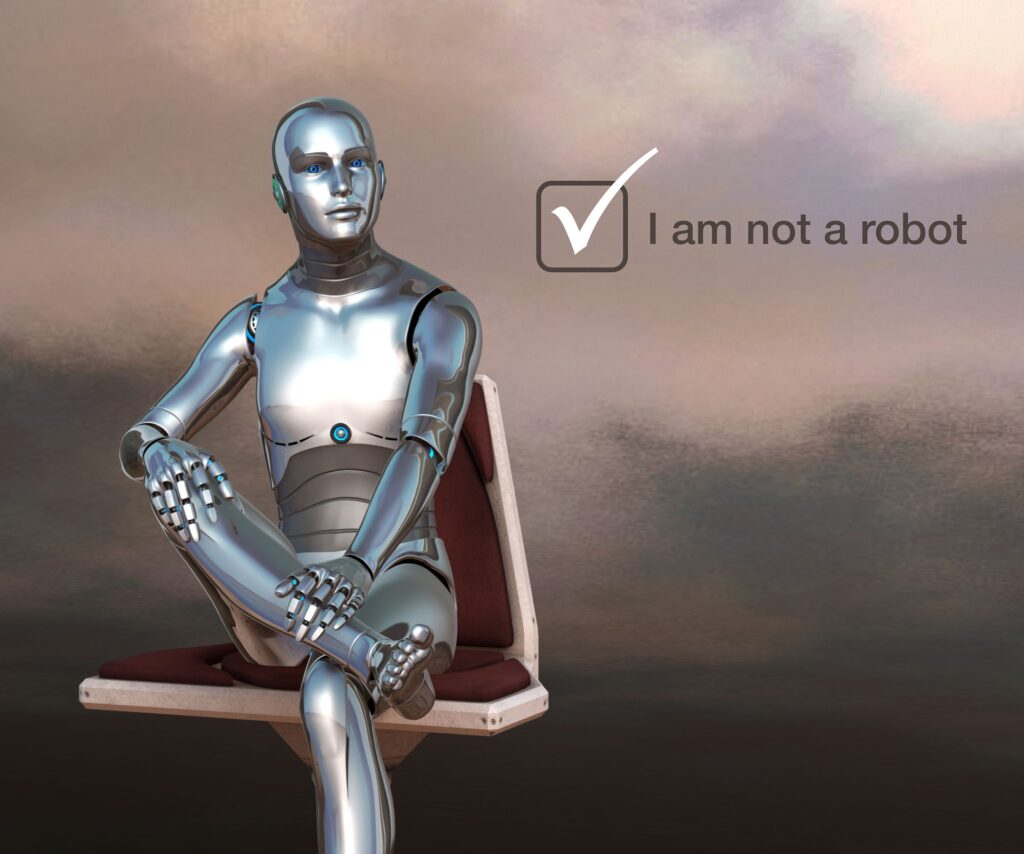

Web scraping large-scale websites often comes with a serious hurdle: CAPTCHA challenges. Short for “Completely Automated Public Turing test to tell Computers and Humans Apart,” CAPTCHA is designed to block automated access and verify that a real human is interacting with the site.

In this post, I will walk you through:

- Why CAPTCHAs appear

- The manual approach I used in my scraper for my project

- Popular automated techniques: third-party CAPTCHA solvers and ML-based tools

- The ethical and legal considerations of solving CAPTCHAs


Why Do Websites Use CAPTCHA?

Websites deploy CAPTCHAs primarily to:

- Throttle bots sending excessive requests

- Protect login and signup forms from abuse

- Prevent scraping of valuable or protected data

- Block suspicious browsing patterns (e.g., rapid requests from the same IP)

For web scrapers, this often leads to blocked sessions, broken workflows, or pages that don’t load fully unless a CAPTCHA is solved.

Option 1: Manual CAPTCHA Solving

In my academic project I solved CAPTCHAs manually. This was practical because I rarely encountered them: the scraper followed respectful scraping practices, including IP rotation through a reputable provider, human-like browsing patterns, API-based parsing, visiting only public and permitted URLs, and limiting the number of requests sent from any single IP. For the rare occasions when a CAPTCHA did appear, I added a way to solve it manually. The setup looked like this:

- Used paid datacenter proxies from a reputable provider (Smartproxy) with geolocation set to match the target domain, reducing the chance of IP bans

- Implemented IP rotation and session-based fingerprinting to simulate real user sessions (Learn more: IP Rotation Using Smartproxy)

- Rotated user-agents and injected fingerprint evasion techniques (Read: User-Agent Rotation Guide)

- Added randomized human-like delays and natural navigation behavior like scrolling and clicking

- Used an API-based scraping approach
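The randomized delays and scrolling from the list above could be sketched roughly as follows. This is an illustration and not the project's actual code: the function names `human_delay` and `scroll_in_steps`, the default ranges, and the step sizes are all assumptions. The only Selenium call used is `execute_script`.

```python
import random
import time


def human_delay(min_s=2.0, max_s=6.0, sleep=time.sleep):
    """Sleep for a random duration in [min_s, max_s] and return that duration."""
    if min_s < 0 or max_s < min_s:
        raise ValueError("expected 0 <= min_s <= max_s")
    pause = random.uniform(min_s, max_s)
    sleep(pause)
    return pause


def scroll_in_steps(driver, steps=4, step_px=600, sleep=time.sleep):
    """Scroll down in a few uneven steps with a short random pause after each.

    `driver` is assumed to be a Selenium WebDriver (anything that offers
    execute_script works). The step size is varied so scrolls are not identical.
    """
    for _ in range(steps):
        offset = int(step_px * random.uniform(0.6, 1.2))
        driver.execute_script("window.scrollBy(0, arguments[0]);", offset)
        human_delay(0.4, 1.5, sleep=sleep)
```

Calling `human_delay()` between page loads keeps the request rate low and irregular. The `sleep` parameter exists only so the pause can be replaced in tests.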

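    # Simplified example
    from selenium import webdriver

    driver = webdriver.Chrome()  # assumption: Chrome; any Selenium WebDriver works
    driver.get("https://abcd.com.au")
    print("Solve CAPTCHA if shown...")
    input("Press Enter after solving manually...")

Pseudo Code: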

    # Check if CAPTCHA is present (e.g., from Incapsula or other bot protection)
    function is_captcha_present(driver):
        return (
            "_incapsula_" in current_url OR
            page contains iframe with '_Incapsula_Resource' OR
            page source includes keyword 'captcha'
        )

    # Wait and let a human solve the CAPTCHA manually before proceeding
    function wait_for_captcha(driver, timeout):
        print("CAPTCHA challenge detected. Please solve it in the browser...")
        until timeout seconds:
            if CAPTCHA iframe disappears:
                print("CAPTCHA solved. Resuming scraper.")
                break
            else:
                wait and recheck
        if still not solved:
            print("Timeout. CAPTCHA was not solved.")

This helped to:

- Avoid breaching site terms by bypassing security mechanisms automatically

- Continue our scraper flow seamlessly after human verification

- Maintain full session context after CAPTCHA is solved
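The detection-and-wait pseudocode above could be translated into Python along these lines. Treat it as a sketch under assumptions: the CSS selector for the Incapsula iframe, the default timeout, and the polling interval are illustrative, and `driver` is expected to be a Selenium WebDriver. The locator string `"css selector"` is the value of Selenium's `By.CSS_SELECTOR`, written out so the helpers have no import-time dependency.

```python
import time

CSS_SELECTOR = "css selector"  # same value as selenium's By.CSS_SELECTOR
INCAPSULA_IFRAME = "iframe[src*='_Incapsula_Resource']"  # assumed selector


def is_captcha_present(driver):
    """Return True if the current page looks like a bot-protection challenge."""
    if "_incapsula_" in driver.current_url.lower():
        return True
    if driver.find_elements(CSS_SELECTOR, INCAPSULA_IFRAME):
        return True
    return "captcha" in driver.page_source.lower()


def wait_for_captcha(driver, timeout=300.0, poll_interval=2.0, sleep=time.sleep):
    """Wait until a human clears the challenge or `timeout` seconds pass.

    Returns True once the challenge iframe is gone, False on timeout.
    """
    print("CAPTCHA challenge detected. Please solve it in the browser...")
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        # The challenge counts as solved once its iframe disappears.
        if not driver.find_elements(CSS_SELECTOR, INCAPSULA_IFRAME):
            print("CAPTCHA solved. Resuming scraper.")
            return True
        sleep(poll_interval)
    print("Timeout. CAPTCHA was not solved.")
    return False
```

A scraper loop would call `is_captcha_present(driver)` after each navigation and, when it returns True, call `wait_for_captcha(driver)` before parsing the page. Returning a boolean lets the caller decide whether to retry or skip the URL after a timeout.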

Option 2: Third-Party CAPTCHA Solving APIs

For high-volume scraping or automation pipelines, services like the following are often used:

| API Provider | Supported CAPTCHAs | Notes |
| --- | --- | --- |
| 2Captcha | reCAPTCHA, hCaptcha | Human-based, pay-per-solve |
| Anti-Captcha | reCAPTCHA, FunCaptcha | API token injection supported |

These services usually:

- Receive the CAPTCHA challenge (image or sitekey)

- Solve it using either humans or models

- Return a solution token to be used in the form submission or browser page

Caution: Use these services only when permitted by the target site’s terms or for testing on your own properties.
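The submit, solve, and return flow described above generally comes down to submitting a task and polling for a token. The sketch below shows only that shape. `SolverClient`, `submit`, and `fetch` are hypothetical names rather than any provider's real SDK, and the timeout and polling interval are assumptions. It is meant for tests against your own properties.

```python
import time
from typing import Optional, Protocol


class SolverClient(Protocol):
    """Hypothetical interface; real providers ship their own SDKs and endpoints."""

    def submit(self, sitekey: str, page_url: str) -> str:
        """Send the challenge and return a task id."""
        ...

    def fetch(self, task_id: str) -> Optional[str]:
        """Return the solution token, or None while the task is still pending."""
        ...


def request_token(client, sitekey, page_url, timeout=120.0,
                  poll_interval=5.0, sleep=time.sleep):
    """Submit a challenge and poll until a token arrives or the timeout passes.

    Returns the token string, or None if no solution arrived in time.
    """
    task_id = client.submit(sitekey, page_url)
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        token = client.fetch(task_id)
        if token is not None:
            return token
        sleep(poll_interval)
    return None
```

Keeping the provider behind a small interface like this makes it straightforward to swap in a stub during testing, so no paid solves are triggered by accident.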

Option 3: Machine Learning-Based CAPTCHA Solving

Some developers use OCR or deep learning for basic CAPTCHA challenges like distorted text or image classification.

Typical ML approaches:

- Tesseract OCR for traditional text CAPTCHAs

- Convolutional Neural Networks (CNNs) trained on labeled CAPTCHA image datasets

- Object detection for click-based or image-select puzzles

However, solving modern CAPTCHA systems like reCAPTCHA v2/v3, hCaptcha, or Cloudflare’s Turnstile is extremely difficult, and training such models is resource-heavy and ethically questionable.

Legal and Ethical Considerations

Automated CAPTCHA bypass deliberately defeats a security mechanism, so depending on the site and jurisdiction it can violate:

- Terms of Service of the target website

- Anti-circumvention provisions in applicable laws

- Broader computer misuse and unauthorized access laws

Our scraping process strictly adhered to the principle of respectful automation, including:

- Complying with robots.txt and not scraping protected routes

- Limiting scraping frequency using randomized delays

- Handling CAPTCHAs manually rather than trying to break them
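Checking robots.txt before each request can be done with Python's standard library. The sketch below is a minimal example and not the project's code: the helper names, the sample rules, the `my-bot` user agent, and the 5-second fallback delay are all assumptions. It parses rules from a list of lines so it runs without network access.

```python
from urllib.robotparser import RobotFileParser

# Sample rules for illustration only; a real scraper would load the site's own file.
SAMPLE_ROBOTS = [
    "User-agent: *",
    "Disallow: /account/",
    "Crawl-delay: 10",
]


def build_robots(lines):
    """Parse robots.txt content given as a list of lines."""
    parser = RobotFileParser()
    parser.parse(lines)
    return parser


def allowed_and_delay(parser, user_agent, url, default_delay=5.0):
    """Return (allowed, delay_seconds) for `url` under the parsed rules.

    Uses the site's Crawl-delay when one is declared, else `default_delay`.
    """
    allowed = parser.can_fetch(user_agent, url)
    delay = parser.crawl_delay(user_agent)
    return allowed, (float(delay) if delay is not None else default_delay)


robots = build_robots(SAMPLE_ROBOTS)
print(allowed_and_delay(robots, "my-bot", "https://example.com/account/orders"))
print(allowed_and_delay(robots, "my-bot", "https://example.com/products"))
```

Against a live site, `RobotFileParser.set_url(...)` followed by `read()` fetches the real file in place of `parse`. The returned delay can then serve as a lower bound for the randomized pause between requests.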

Conclusion

CAPTCHA challenges are inevitable when scraping protected sites, but how you handle them defines whether your scraper is respectful or reckless. By combining stealth with respectful scraping practices such as IP rotation, limiting scraping frequency, scraping when the website experiences less traffic, humanized patterns, and so on, we can create resilient and ethical scrapers without stepping into legal gray zones. Stay human. Stay ethical. And if a CAPTCHA says hello, take it as a reminder to slow down.


Shishir Dhakal is a former Software Quality Assurance Engineer and a current postgraduate student in Information Technology Management at Deakin University, Australia. With over 3 years of industry experience, he has worked across software testing, performance engineering, and browser automation. Shishir holds a Bachelor’s degree in Information Management from Nepal and has contributed to large-scale software projects across cloud platforms and enterprise systems. He specializes in tools like Selenium, JMeter, and Python and in modern QA practices. With a passion for quality, performance, and responsible automation, he shares practical guides and real-world insights spanning web scraping, load testing, and software engineering. Connect with him on LinkedIn or explore his technical blog for tutorials and walkthroughs.